Reimagining the limits of optimisation

December 02, 2025

“I hope to contribute, together with my collaborators, to developing efficient optimisation algorithms that combine theoretical soundness with practical usefulness.”

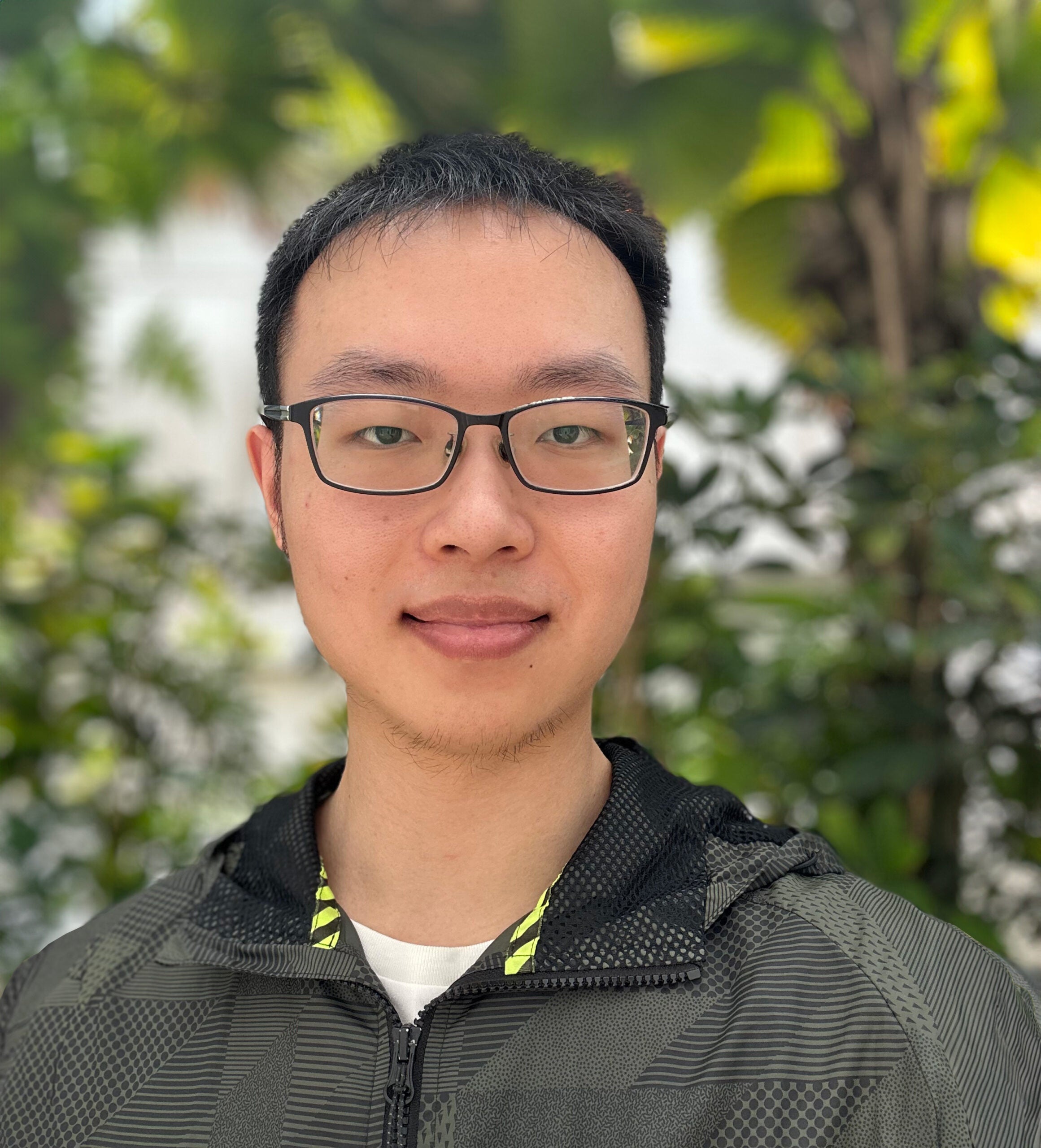

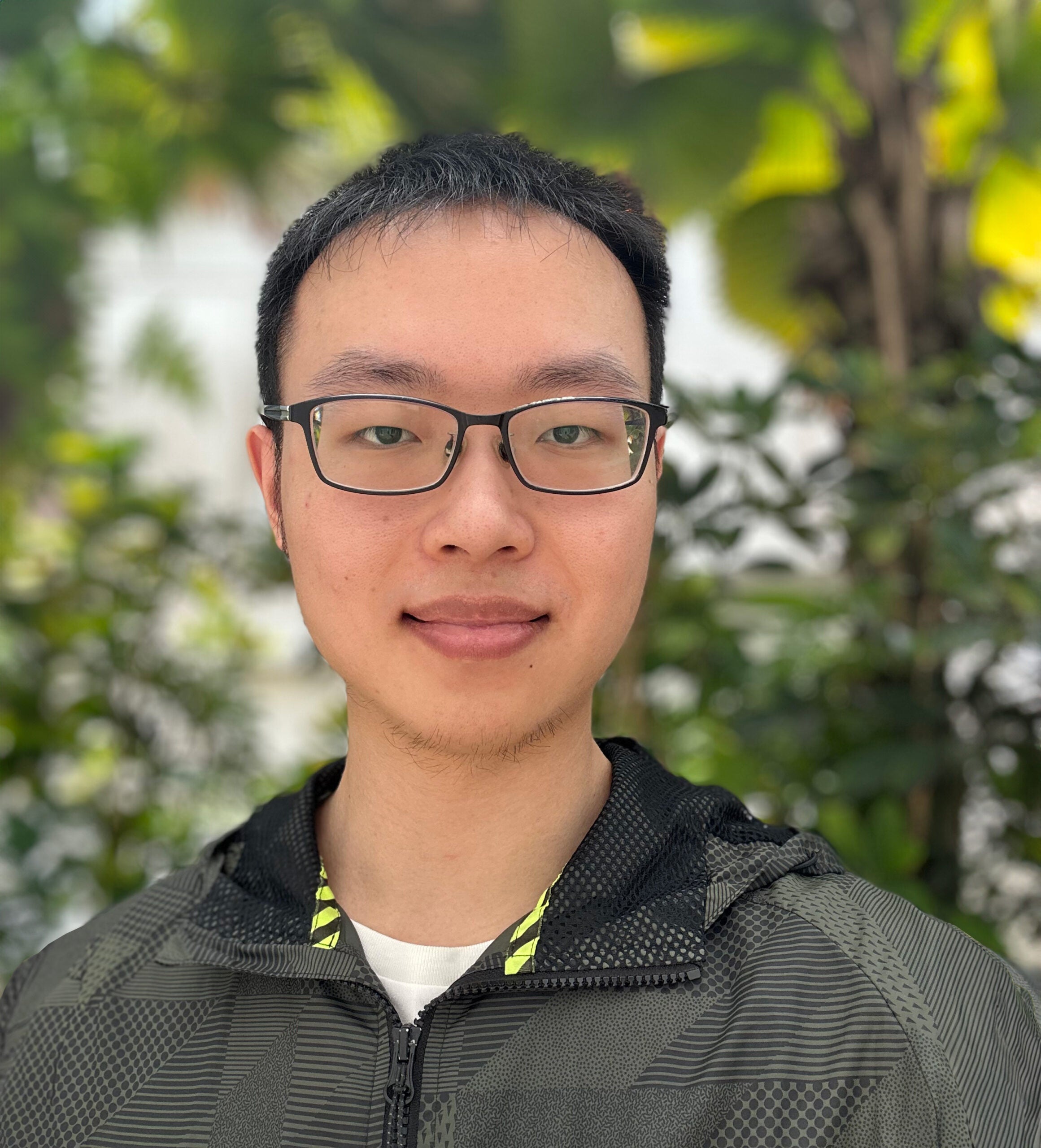

When Dr Tang Tianyun, a PhD alumnus of NUS Mathematics, learned he had received the prestigious Ivo and Renata Babuška Thesis Prize (2026), he saw it as an affirmation of the work he has pursued for years. The support of his advisors and collaborators, he says, shaped every part of his PhD journey.

That journey began with a fascination with the versatility of mathematical optimisation: the idea that it could bridge abstract theory and practical tools for real-world problem solving.

Under the mentorship of Prof Toh Kim-Chuan, a leading authority in optimisation, Tianyun was introduced to semidefinite programming (SDP), an advanced form of mathematical optimisation that can capture complex relationships and systems – subject to various constraints – arising in engineering, data science and operations research.

“I was drawn to SDPs for their ability to model a wide range of applications and to recover global solutions to problems that are otherwise non-convex,” he says.

But there was a catch. Despite their theoretical power, large-scale SDPs are computationally very costly to solve, which limits their practical use. This constraint became the starting point for his research. The most impactful insight from his work, he says, was recognising that many real SDP problems contain hidden structures – patterns such as sparsity or low-rankness. By exploiting these low-rank structures, he reformulated the problems in a more compact form, significantly reducing computational time and resources.

This work could reshape how researchers approach large computational models across disciplines. Tianyun says, “Many tasks in machine learning and data science can be formulated as SDPs. Our methods make it possible to solve these SDP models much more efficiently and to scale them to higher dimensions, which is crucial for modern large-scale datasets.”

The journey towards this goal, however, was far from straightforward. “Reducing the size makes the problem significantly more complex in other ways,” he says. The smaller formulation becomes non-convex – mathematically messy, with no easy guarantees of finding the best answer.
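For readers who want the mathematics, the trade-off can be sketched in standard textbook notation. This is the generic low-rank factorisation idea (often attributed to Burer and Monteiro), not necessarily the exact formulation used in Tianyun's thesis. The symbols $C$, $\mathcal{A}$, $b$, $R$ and $r$ are assumptions introduced here for illustration.

$$
\min_{X \in \mathbb{S}^n} \; \langle C, X \rangle \quad \text{s.t.} \quad \mathcal{A}(X) = b, \;\; X \succeq 0
\qquad\longrightarrow\qquad
\min_{R \in \mathbb{R}^{n \times r}} \; \langle C, R R^{\top} \rangle \quad \text{s.t.} \quad \mathcal{A}(R R^{\top}) = b
$$

The problem on the left is convex but has on the order of $n^2$ unknowns. Substituting $X = R R^{\top}$ with $r$ much smaller than $n$ leaves only $nr$ unknowns and makes the positive semidefinite constraint automatic. The price is that the objective and constraints become quadratic in $R$, so convexity is lost.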

To navigate this complexity, Tianyun and his collaborators developed new strategies. They designed an adaptive rank-tuning scheme that helps algorithms work through the non-convex landscape and incorporated tools from Riemannian optimisation – a technique that deals with curved geometric spaces rather than flat ones. They also established conditions that ensure the mathematical “space” the algorithm works in behaves smoothly, which is crucial for good algorithmic performance.
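As a rough illustration of what optimising over a curved space can look like, the toy sketch below applies Riemannian gradient descent to a small low-rank SDP whose only constraint is that the diagonal of the matrix equals one. It is not the algorithm developed by Tianyun and his collaborators. The rank is fixed rather than adaptively tuned, and every name in it (`solve_low_rank_sdp`, `retract`, the 4-node cost matrix `C`) is hypothetical. Each row of the factor is kept on a unit sphere, the gradient is projected onto the sphere's tangent space, and a renormalisation step pulls each update back onto the sphere.

```python
import math
import random


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def objective(C, R):
    """<C, R R^T> = sum over i, j of C[i][j] * <R[i], R[j]>."""
    n = len(R)
    return sum(C[i][j] * dot(R[i], R[j]) for i in range(n) for j in range(n))


def normalise(v):
    norm = math.sqrt(dot(v, v))
    return [a / norm for a in v]


def riemannian_gradient(C, R):
    """Euclidean gradient 2*C*R (C symmetric), projected row by row
    onto the tangent space of the unit sphere at each row of R."""
    n, r = len(R), len(R[0])
    grad = []
    for i in range(n):
        g = [2.0 * sum(C[i][j] * R[j][k] for j in range(n)) for k in range(r)]
        radial = dot(g, R[i])  # component pointing off the sphere
        grad.append([g[k] - radial * R[i][k] for k in range(r)])
    return grad


def retract(R, G, step):
    """Step against the gradient, then renormalise each row onto the sphere."""
    return [normalise([a - step * b for a, b in zip(row, g)])
            for row, g in zip(R, G)]


def solve_low_rank_sdp(C, rank, iters=200, seed=0):
    """Minimise <C, R R^T> subject to every row of R having unit norm."""
    rng = random.Random(seed)
    n = len(C)
    R = [normalise([rng.gauss(0.0, 1.0) for _ in range(rank)]) for _ in range(n)]
    history = [objective(C, R)]
    for _ in range(iters):
        G = riemannian_gradient(C, R)
        step = 1.0
        candidate = retract(R, G, step)
        # Backtracking: halve the step until the objective does not increase.
        while objective(C, candidate) > history[-1] and step > 1e-12:
            step *= 0.5
            candidate = retract(R, G, step)
        if objective(C, candidate) <= history[-1]:
            R = candidate
            history.append(objective(C, R))
    return R, history


# Hypothetical cost matrix: adjacency matrix of a 4-node cycle.
C = [[0, 1, 0, 1],
     [1, 0, 1, 0],
     [0, 1, 0, 1],
     [1, 0, 1, 0]]
R, history = solve_low_rank_sdp(C, rank=2)
print("initial objective:", history[0], "final objective:", history[-1])
```

The backtracking step only guarantees that the objective never increases. Because the factorised problem is non-convex, a sketch like this offers no guarantee of reaching the global optimum, which is the gap that conditions of the kind described above are meant to address.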

Looking ahead, Tianyun is excited to apply SDP methods to statistics and scientific computing – problems rich with structural properties and ripe for algorithmic innovation.

And when the mathematics gets dense, as it inevitably does, he has learned how to keep perspective. “When I’m stuck for a long time, I shift my attention to another question,” he says. “Stepping away helps me stay motivated. Usually, I come back with fresh ideas.”

Find out more at American Mathematical Society News